Industrial plants and facilities often produce big data, which can be distributed and analyzed to improve operations and maintenance and to reduce repair costs. But securely getting this data to the people who need it can be complex. Many options are available, including:

- In-house systems

- Virtual private networks (VPNs)

- Cloud for data transmission only

- Cloud for hosting end-user or vendor systems

Benson Hougland is vice president of marketing and product strategy for Opto 22 and has 30 years of experience in IT and industrial automation. His 2014 TEDx Talk, available at https://plnt.sv/1805-AZ, introduces nontechnical people to the IoT. He can be reached at [email protected].

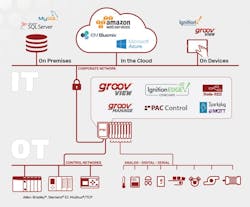

Before exploring these options, let’s examine characteristics common to all. Big data is generated by wired and wireless sensors, transmitters and analyzers installed in plants and facilities. These field devices acquire data and typically connect to inputs of industrial controllers, such as programmable logic controllers (PLCs), programmable automation controllers (PACs) and edge programmable industrial controllers, or EPICs (see Figure 1).

Industrial controllers not only obtain raw data from field devices but also process it to filter out anomalies and analyze it to derive more useful information. Most industrial controllers have some built-in data storage capacity, but it's common to connect them, either directly or through HMI software, to PC-based software platforms. These platforms may be data historians designed to store large amounts of data, or they may be other types of databases.
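As a rough illustration of the kind of filtering and analysis described above, the following Python sketch discards spikes that stray too far from the median of recent readings and then summarizes what remains. The function names, window size, deviation threshold, and sample temperatures are all assumptions made for this example; real controllers use their own vendor-specific logic.

```python
from statistics import median


def filter_anomalies(readings, window=5, max_deviation=10.0):
    """Keep readings that stay within max_deviation of the median
    of the most recently accepted readings (hypothetical thresholds)."""
    accepted = []
    for value in readings:
        recent = accepted[-window:]
        if recent and abs(value - median(recent)) > max_deviation:
            continue  # treat as a spike and discard it
        accepted.append(value)
    return accepted


def summarize(readings):
    """Derive a few simple statistics from the cleaned readings."""
    return {
        "min": min(readings),
        "max": max(readings),
        "mean": sum(readings) / len(readings),
    }


# Made-up temperature samples containing two obvious sensor glitches.
raw = [71.8, 72.1, 72.0, 250.0, 72.3, 72.2, -40.0, 72.4]
clean = filter_anomalies(raw)
summary = summarize(clean)
print(clean)
print(summary)
```

This sketch assumes the first reading is valid, since it has nothing earlier to compare against. A production filter would also need to handle a genuine step change in the process value.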

Knowing how big data is initially acquired and processed, let’s look at options for accessing it.

In-house systems

With this option, a plantwide intranet connects each user’s PC, and a plantwide Wi-Fi network may also connect laptops, smartphones, and tablets. The plant’s information technology (IT) personnel usually provide the required infrastructure, including PCs, networking hardware, software platforms, and so on.

In-house systems are often the best approach for companies with just a single plant or facility. This is particularly the case when support for plant operations is provided exclusively by in-house personnel.

Although this option appears simple, it requires IT staff for installation and support, and it does not provide data access for personnel outside the plant. Also, while IT teams are well-suited for administering the networking and data storage infrastructure, they are often far less familiar with specific operations technology (OT) software, protocols, and process historians. An in-house system therefore requires close cooperation between IT and OT personnel.

VPNs

VPNs are an established and secure technology frequently employed by IT personnel to interconnect remote locations over public networks, such as the internet. This method lends itself well to big-data storage and access applications, particularly for very large files, such as videos.

When data access is required by personnel outside the plant, VPNs are often used to extend the plant’s in-house network. VPN solutions do carry some of the same downsides as in-house systems, and they present additional challenges as well.

Both the local and remote ends of each VPN link must be coordinated and maintained by IT, which may be logistically challenging. Furthermore, configuring and maintaining a VPN can be quite complex, both initially and throughout the life of the system.

Cloud for data transmission only

Using the cloud for data transmission offloads IT-related tasks, allowing plant OT personnel to focus on improving operations (Figure 2). This is made possible by using open protocols optimized for exactly these kinds of data transfers.

For instance, MQTT (Message Queuing Telemetry Transport) is a publish-subscribe messaging protocol standardized as ISO/IEC 20922. MQTT is well suited to industrial data gathering because it is lightweight and efficient and because it can transfer data securely even over slow or unreliable networks.

The source data from controllers or HMIs is published once to a broker (also called a server) and then retransmitted to subscribing clients, such as PCs, smartphones, tablets, or software applications. MQTT is readily combined with the open-source Sparkplug specification, which defines a data messaging format suitable for encoding the types of data produced by industrial plants and facilities.
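The publish-once, deliver-to-many pattern can be sketched in a few lines of Python. This is a toy in-memory stand-in for a broker, not a real MQTT implementation: it runs in one process, ignores quality-of-service levels and security, and carries the payload as JSON, whereas actual Sparkplug B payloads are encoded with Protocol Buffers. The topic layout follows the Sparkplug B namespace (`spBv1.0/group/message type/edge node/device`), but the group, node, and device names are hypothetical.

```python
import json


def topic_matches(topic_filter, topic):
    """Simplified MQTT-style matching: '+' matches one level, '#' the rest."""
    filter_parts = topic_filter.split("/")
    topic_parts = topic.split("/")
    for i, part in enumerate(filter_parts):
        if part == "#":
            return True
        if i >= len(topic_parts):
            return False
        if part != "+" and part != topic_parts[i]:
            return False
    return len(filter_parts) == len(topic_parts)


class InMemoryBroker:
    """Toy broker: remembers subscriptions and fans out each message."""

    def __init__(self):
        self._subscriptions = []  # list of (topic_filter, callback)

    def subscribe(self, topic_filter, callback):
        self._subscriptions.append((topic_filter, callback))

    def publish(self, topic, payload):
        for topic_filter, callback in self._subscriptions:
            if topic_matches(topic_filter, topic):
                callback(topic, payload)


broker = InMemoryBroker()
historian, dashboard = [], []

# The historian wants everything; the dashboard only wants PlantA device data.
broker.subscribe("spBv1.0/#", lambda t, p: historian.append((t, json.loads(p))))
broker.subscribe("spBv1.0/PlantA/DDATA/#", lambda t, p: dashboard.append((t, json.loads(p))))

# Each controller publishes once; the broker delivers to every matching subscriber.
broker.publish("spBv1.0/PlantA/DDATA/Line1/Pump1", json.dumps({"flow_gpm": 41.5}))
broker.publish("spBv1.0/PlantB/DDATA/Line2/Boiler1", json.dumps({"temp_f": 180.2}))

print(len(historian), len(dashboard))
```

The publishing side never needs to know who the subscribers are, which is what lets new users or applications be added without touching the controller.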

With MQTT/Sparkplug architectures, every connection originates at the device, yet data can flow in both directions over that secured connection. Because the controller opens each connection outbound to the broker, no inbound ports need to be opened on the plant firewall or on the controller, which eliminates a primary cyberattack vector. This also dramatically reduces reliance on IT departments for configuring and maintaining these systems.
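The idea that a device can receive data without ever accepting an inbound connection can be shown with plain TCP sockets. The Python sketch below is not MQTT and has no encryption; it only illustrates connection direction. The "broker" side listens on a local port, the "device" side dials out, and a command travels back over the connection the device opened. The message text and the use of localhost are assumptions for the demonstration.

```python
import socket
import threading


def run_broker(server_sock, log):
    """Accept one device connection, read its data, and reply on the same link."""
    conn, _ = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        log.append(reader.readline().strip())
        conn.sendall(b"CMD setpoint=70\n")


# Broker side: the only listening port in this example.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
server.listen(1)
host, port = server.getsockname()

broker_log = []
broker_thread = threading.Thread(target=run_broker, args=(server, broker_log), daemon=True)
broker_thread.start()

# Device side: opens an outbound connection and never listens for inbound ones.
with socket.create_connection((host, port), timeout=5) as device:
    with device.makefile("r", encoding="utf-8") as reader:
        device.sendall(b"DATA temperature=72.1\n")
        reply = reader.readline().strip()

broker_thread.join(timeout=5)
server.close()

print(broker_log, reply)
```

In a real deployment the same pattern would run over MQTT with TLS, and the listening side would be a broker in the cloud or on the plant network rather than a thread in the same program.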

Another open-source technology, Node-RED, helps end users easily configure data flows from edge devices all the way up to the cloud and back. This browser-based programming tool lets users visually link prebuilt function blocks (called nodes) and define data communication paths.

MQTT, Sparkplug, and Node-RED do much of the heavy lifting for end users looking to simply and securely move data from multiple plants or facilities to many users. The main requirement is support for these open-source technologies by the hardware at the data origination site via either a controller or an HMI.

Cloud for hosting end-user or vendor systems

The cloud can also be used to host data access applications. Instead of provisioning and maintaining on-premises systems, users can let the cloud provider handle these chores.

Popular cloud computing platforms such as Amazon Web Services, Microsoft Azure, and IBM Watson offer robust and scalable architectures where users can develop and deploy cloud-based applications. This approach requires a considerable amount of IT expertise in addition to the expense of creating or purchasing the data access software hosted in the cloud.

This article is part of our monthly Automation Zone column.

Alternatively, some vendors provide complete cloud-hosted data access solutions. This approach is more expensive but provides a turnkey service that requires little IT expertise from the end user. One concern is total reliance on the vendor, which controls all data flows into and out of the cloud and is often a smaller company with just a few years of experience.

End users at industrial sites are often already awash in big data, and every new equipment installation tends to increase the number of data sources. Users want to capture and access this data to gain value and visibility, but many facilities must achieve this goal using internal personnel and can't afford extensive custom IT development and architectures. A controller or HMI that supports open-source technologies such as MQTT, Sparkplug, and Node-RED offers a powerful and compelling way for end users to easily and securely access remote data.